I HEAR A PERCUSSIVE THUD. SOMETHING IS HITTING THE FLOOR IN FRONT OF ME REPEATEDLY. It’s reverberating (I’m in a large room, I guess) and the rhythm is punctuated by frenzied bursts of high-pitched squeaks nearby. In the distance, I hear shuffling and the murmur of voices.

I open my eyes. I’m standing in a gymnasium just a few feet behind the basket. In the key in front of me is LeBron James [ABOVE, JUMPING], wearing black-and-red Nike gear from head to toe: I count at least four swooshes. I suppose it’s a cliché to say that a pro basketball player looks taller in real life, but my courtside view makes his two metres seem extra impressive. Indifferent to my presence, King James casually dribbles, leaps towards me, and executes a highlight-reel-worthy dunk.

This is not my first time using virtual reality (V.R.), but it is my first time watching an NBA star practise. I’m “in” Striving for Greatness, a V.R. film directed by Félix Lajeunesse and Paul Raphaël of Montreal’s Felix & Paul Studios and coproduced with James’ sports media company Uninterrupted. I get comfortable in my chair and watch James grind out an intense fitness regime of yoga, weightlifting, and scrimmages, in preparation for his recent championship season. I can see the specular reflection on individual beads of sweat. But I’m not here to evaluate photorealism. From time to time, I close my eyes during the film—or “experience,” as V.R. works are often termed—because I’m trying to understand how its sound design contributes to my sense of immersion. What role do the artists working in this emerging medium think that sound plays? Is sound central to V.R. worldbuilding, or is it incidental?

TWENTY SIXTEEN IS YEAR ZERO FOR VIRTUAL REALITY. HTC’s Vive, Oculus’ Rift, and Samsung’s Gear headsets were all released this year, and now Sony is entering the fray. Adoption of consumer headsets is modest thus far, but interest is high. For better or for worse, V.R. will, it appears, soon become a fixture in living rooms and alongside computers. Strapping HD displays onto our faces is something we do now.

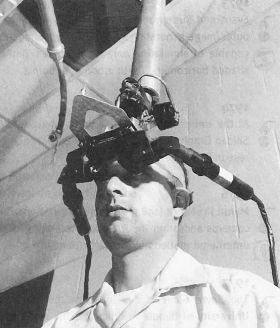

The three pillars of immersion—stereoscopic 3-D imagery, a wide field of view, and low-latency head tracking—were all crudely implemented in a clunky prototype created by computer graphics pioneer Ivan Sutherland and his student Bob Sproull at MIT's Lincoln Laboratory in 1968. Like many technologies that are now ubiquitous, V.R. was nurtured in military, aerospace, and academic contexts in the 1970s and ’80s. Since it was prohibitively expensive, it was only deployed in high-stakes scenarios like astronaut training or flight simulation—where lives or tens of millions of dollars of equipment were at risk. It was the hundreds of millions of lightweight, high-definition displays required by the smartphone industry that tipped the balance to make consumer V.R. viable.

In fall 2012, nineteen-year-old Palmer Luckey pitched the Oculus Rift V.R. headset to game developers on the crowd-funding platform Kickstarter, offering backers an early build of the Rift and access to the Oculus software-development kit, and raised $2.4 million in pledges. The company grew quickly. In August 2013, 3-D-graphics legend John Carmack (formerly lead programmer of the Doom and Quake videogame series) signed on as Oculus’ chief technology officer, and in spring 2014 Facebook acquired the company for $2 billion. Carmack noted on the Oculus blog that everyone working in V.R. today “is a pioneer. The paradigms that everyone will take for granted in the future are being figured out today; probably by people reading this message.”

“Two-dimensional audio has always been kind of frustrating, and that’s the reason 5.1 and 6.1 [surround] sound never really caught on—they are basically planar audio.” Jean-Pascal Beaudoin, the director of sound technology at Felix & Paul Studios and a cofounder of its spin-off, Headspace Studio, is on the phone explaining the myriad technical hurdles in V.R. that are only tangentially related to optics. Time-honoured approaches to mixing surround sound don’t cut it in V.R. “Just put eighty per cent of the content in the left, right, and centre channels, and sugar it a bit in the rear,” he says sardonically. “It’s just glorified stereo.”

He’s right, of course. But his comments on the spatialization of sound are rooted in specific listening environments and media formats. In the 1960s, when music producers like Brian Wilson and “wall of sound” architect Phil Spector were still mixing in mono because of the control it afforded them, stereo was on its way to becoming the dominant format. In the 1980s, Dolby Stereo and Dolby Surround brought living-room and movie listeners into a rudimentary four-channel matrix (left, right, centre, rear), which French composer and film-sound theorist Michel Chion eloquently described in his 1990 book Audio-Vision: Sound on Screen as “a space with fluid borders, a sort of superscreen enveloping the screen.” Dolby’s current surround platform, Dolby Atmos, can be played back on layouts such as 7.1.4, which rely not only on side, centre, and rear speakers but on overhead ones as well.

But the spatialization of sound in a living room or movie theatre is a far cry from how sound needs to work to be effective in V.R. “You have to capture a full sphere in order to help you localize sound sources,” Beaudoin tells me. “It’s the primary factor in creating immersion, because our brains process sound faster than image. If we were in V.R. and you were standing in front of me and I was talking to you and you turned your head ninety degrees to the right, the way you hear me with your left ear versus your right is suddenly very different.” If it isn’t, the immersion is broken. Sound is that important in the medium.
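Beaudoin’s head-turn example can be put in rough numbers. What follows is a minimal sketch. It assumes Woodworth’s classic spherical-head approximation for the interaural time difference (ITD), an average head radius of 8.75 cm, and a speed of sound of 343 m/s. None of these figures come from Beaudoin. Real V.R. audio engines use measured head-related transfer functions rather than this formula.

```python
import math

# Assumed constants (not from the article): a typical adult head radius
# and the speed of sound in air at roughly room temperature.
HEAD_RADIUS_M = 0.0875
SPEED_OF_SOUND_M_S = 343.0


def woodworth_itd(azimuth_deg: float) -> float:
    """Approximate interaural time difference, in seconds, for a distant source.

    Woodworth's spherical-head formula: ITD = (r / c) * (theta + sin(theta)).
    Azimuth 0 is straight ahead and positive values are toward the listener's
    left, so a positive result means the left ear hears the sound first.
    The formula only covers frontal azimuths between -90 and +90 degrees.
    """
    if abs(azimuth_deg) > 90.0:
        raise ValueError("formula only covers azimuths in [-90, 90] degrees")
    theta = math.radians(azimuth_deg)
    return (HEAD_RADIUS_M / SPEED_OF_SOUND_M_S) * (theta + math.sin(theta))


def source_azimuth_after_turn(source_az_deg: float, head_yaw_deg: float) -> float:
    """Azimuth of a fixed source relative to the head after the head turns.

    head_yaw_deg uses the same convention as azimuth (positive = turn left),
    so turning right by 90 degrees is head_yaw_deg = -90.
    """
    return source_az_deg - head_yaw_deg


if __name__ == "__main__":
    before = woodworth_itd(0.0)
    after = woodworth_itd(source_azimuth_after_turn(0.0, -90.0))
    print(f"facing the speaker: {before * 1e3:.2f} ms")
    print(f"after turning 90 degrees right: {after * 1e3:.2f} ms (left ear leads)")
```

When you face the speaker, both ears receive the sound at the same instant. After the ninety-degree turn, the speaker sits at your left, and the left ear leads by roughly two-thirds of a millisecond. A renderer has to recompute this cue, along with level and spectral differences, every time the head moves. That is one reason head tracking matters as much for sound as for image.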

I think back to the visceral experience of that V.R. film Striving for Greatness—hearing rubber bouncing on hardwood, feeling the backboard shudder under James’ authoritative dunk. “There are different resolutions we can use for capturing spherical audio, and the most common one—which is beginning to resemble a standard—is first-order ambisonic,” Beaudoin says. Unlike recordings made using other multichannel formats, ambisonic recordings contain a speaker-independent representation of a sound field referred to as B-format, which can be decoded to any surround configuration when played back. Developed by British academics in the early 1970s, the ambisonic full-sphere surround-sound technique had limited appeal outside audiophile, field-recording, and research applications until very recently. Its flexibility is perfect for headphone-centric V.R. experiences, where the location and orientation of the listener relative to sound sources are crucial. “There’s already a handful of microphones that allow you to capture first-order [ambisonic sound],” Beaudoin says. (TetraMic and SoundField microphones have long been available, while Sennheiser’s AMBEO VR Mic has just come out.) Because these four-channel assemblies record everything in a scene, says Beaudoin, the traditional film-sound playbook still applies in mixing V.R. films. In Striving for Greatness, LeBron, his trainer, and other characters were also recorded with standard mikes; then, in post-production, these recordings were situated and mixed within the master ambisonic recording. “You have to spatialize the characters’ trajectory as they are moving—azimuth, distance, and elevation. So it’s a lot of work.”
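To make “speaker-independent” and “spatialize the characters’ trajectory” concrete, here is a minimal sketch of first-order ambisonic panning. It assumes the traditional FuMa B-format convention: W is scaled by 1/√2, X points front, Y points left, and Z points up. Distance is handled with a crude 1/distance gain. The function names are hypothetical and do not come from any Felix & Paul or Headspace tool. Many current V.R. pipelines use the AmbiX convention, which orders and scales the channels differently. The sketch also shows the four channels being rotated to follow the listener’s head and then decoded to a speaker ring. Headphone playback would instead need binaural filtering, which is not shown here.

```python
import math
from dataclasses import dataclass


@dataclass
class BFormat:
    """One sample of a first-order B-format signal (FuMa weighting assumed)."""
    w: float  # omnidirectional component, scaled by 1/sqrt(2)
    x: float  # front/back figure-of-eight
    y: float  # left/right figure-of-eight
    z: float  # up/down figure-of-eight


def encode(sample: float, azimuth_deg: float, elevation_deg: float,
           distance_m: float = 1.0) -> BFormat:
    """Place a mono sample at (azimuth, elevation, distance).

    Azimuth is counterclockwise from straight ahead (90 = hard left) and
    elevation is positive upward. The 1/distance gain, clamped so sources
    closer than 1 m are not boosted, is only a stand-in for real distance cues.
    """
    s = sample / max(distance_m, 1.0)
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return BFormat(
        w=s / math.sqrt(2.0),
        x=s * math.cos(az) * math.cos(el),
        y=s * math.sin(az) * math.cos(el),
        z=s * math.sin(el),
    )


def rotate_yaw(b: BFormat, head_yaw_deg: float) -> BFormat:
    """Counter-rotate the sound field when the listener turns their head.

    head_yaw_deg uses the azimuth convention (positive = turn left), so a
    source at azimuth A is rendered at A - head_yaw relative to the head.
    W and Z do not change under rotation about the vertical axis.
    """
    psi = math.radians(head_yaw_deg)
    c, s = math.cos(psi), math.sin(psi)
    return BFormat(w=b.w, x=b.x * c + b.y * s, y=b.y * c - b.x * s, z=b.z)


def decode_horizontal_ring(b: BFormat, speaker_azimuths_deg: list[float]) -> list[float]:
    """Decode to any horizontal speaker ring with a virtual cardioid per speaker.

    For a source on the horizon at azimuth t, each feed equals
    s * (1 + cos(t - a)) / N for a speaker at azimuth a. Z is ignored.
    This is an illustrative decoder, not a tuned production one.
    """
    n = len(speaker_azimuths_deg)
    feeds = []
    for az_deg in speaker_azimuths_deg:
        a = math.radians(az_deg)
        feeds.append((math.sqrt(2.0) * b.w + b.x * math.cos(a) + b.y * math.sin(a)) / n)
    return feeds


if __name__ == "__main__":
    # The dunk happens straight ahead of the viewer, slightly above eye level...
    dunk = encode(1.0, azimuth_deg=0.0, elevation_deg=10.0, distance_m=2.0)
    # ...then the viewer turns their head 90 degrees to the right.
    heard = rotate_yaw(dunk, head_yaw_deg=-90.0)
    quad = [45.0, 135.0, 225.0, 315.0]  # front-left, rear-left, rear-right, front-right
    print([round(f, 3) for f in decode_horizontal_ring(heard, quad)])
```

Moving a character through a scene amounts to calling `encode` with changing azimuth, elevation, and distance over time. The same W, X, Y, Z signals can then feed a stereo pair, a quad ring, or any other layout. That flexibility is what makes the format attractive for head-tracked V.R.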

In addition to voice and sound effects, music requires careful consideration in V.R. Music can be part of a scene, occurring within its 3-D environment (diegetic music), or it can sit on top of it (score, or nondiegetic, music). “So you start the experience with Drake’s track ‘Back To Back’—it’s in stereo—and you’re on a boat . . . suddenly you are in front of LeBron’s house and he is doing yoga, and then you arrive in the gym. The music is not stereo anymore, it is playing in the gym. And later, when you are in his SUV with his son Bronny, the track that is playing is nondiegetic. Then next thing you know, the same track—it’s the same flow, it’s continuing, but it’s playing in the SUV stereo,” he says excitedly. “That’s the kind of tactic we can employ to deliver a high level of immersion and also support storytelling arcs.”

NOT ALL V.R. IS DOCUMENTARY, OF COURSE: DIFFERENT NARRATIVE OR EXPERIENTIAL GOALS INSPIRE PARTICULAR APPROACHES TO HANDLING SOUND. Last spring, Danish artist Louise Foo [RIGHT] spoke at an event called COMPOSITE, held in software company Autodesk’s chic open-concept office in Montreal’s Cité du Multimédia neighbourhood. Foo capped her talk by playing a video of Lake Louise, a synesthetic V.R. experience she’s been working on for the last several months. It starts with a simulation of the viewer floating above the surface of a large body of water, with a few islands visible on the horizon. Layers of Foo’s ethereal vocals permeate the monochromatic nautical scene, and deltohedrons spin above the water; when the viewer focuses their gaze on the floating forms, new samples trigger. “The vocals are the only thing that is consistent,” says Foo. “You create a remix of the song by looking around. If you look down into the water, when you look up it’s a new world with new sounds.” Tilting your head changes the pitch of the vocals—how you see shapes your experience of the song. In another sequence, a mass of columnar forms protrudes into the sky, rising and falling with the frequencies. A dub-like “plink” echoes low in the mix, as if it were emanating from a damp, cavernous space; perhaps the columns are stalagmites. “No matter what I seem to want from you,” she sings, “don’t let it get to your head.”
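Foo does not explain how Lake Louise is built, so what follows is only a hypothetical sketch of the two interactions described above. It assumes a typical game-engine setup. A gaze-cone test with a short dwell timer fires a sample trigger, and head tilt is mapped to a playback-rate multiplier. Every name, threshold, and mapping here is an illustrative assumption: the 5-degree cone, the half-second dwell, and the ±12 semitones across ±60 degrees of tilt.

```python
import math
from dataclasses import dataclass


@dataclass
class Vec3:
    x: float
    y: float
    z: float

    def dot(self, other: "Vec3") -> float:
        return self.x * other.x + self.y * other.y + self.z * other.z

    def norm(self) -> float:
        return math.sqrt(self.dot(self))

    def sub(self, other: "Vec3") -> "Vec3":
        return Vec3(self.x - other.x, self.y - other.y, self.z - other.z)


def is_gazed_at(head: Vec3, gaze_dir: Vec3, target: Vec3,
                cone_half_angle_deg: float = 5.0) -> bool:
    """True if the target lies inside a cone around the gaze direction (hypothetical)."""
    to_target = target.sub(head)
    denom = gaze_dir.norm() * to_target.norm()
    if denom == 0.0:
        return False
    return gaze_dir.dot(to_target) / denom >= math.cos(math.radians(cone_half_angle_deg))


class DwellTrigger:
    """Fires once after the gaze has rested on a target for dwell_s seconds."""

    def __init__(self, dwell_s: float = 0.5) -> None:
        self.dwell_s = dwell_s
        self.elapsed = 0.0
        self.fired = False

    def update(self, gazed: bool, dt: float) -> bool:
        if not gazed:
            # Looking away resets the timer and re-arms the trigger.
            self.elapsed, self.fired = 0.0, False
            return False
        self.elapsed += dt
        if not self.fired and self.elapsed >= self.dwell_s:
            self.fired = True
            return True
        return False


def pitch_ratio_from_tilt(head_pitch_deg: float, max_semitones: float = 12.0,
                          max_tilt_deg: float = 60.0) -> float:
    """Map head tilt linearly to semitones, then to a playback-rate ratio 2**(st/12)."""
    tilt = max(-max_tilt_deg, min(max_tilt_deg, head_pitch_deg))
    semitones = max_semitones * tilt / max_tilt_deg
    return 2.0 ** (semitones / 12.0)


if __name__ == "__main__":
    head = Vec3(0.0, 1.6, 0.0)    # viewer floating above the water (hypothetical)
    form = Vec3(0.3, 3.0, -8.0)   # one spinning form (hypothetical position)
    gaze = Vec3(0.0, 0.17, -1.0)  # looking slightly upward
    trigger = DwellTrigger(dwell_s=0.5)
    for frame in range(60):       # one second at an assumed 60 frames per second
        if trigger.update(is_gazed_at(head, gaze, form), dt=1 / 60):
            print(f"frame {frame}: trigger new sample")
    print("playback rate at 20 degrees of tilt:", round(pitch_ratio_from_tilt(20.0), 3))
```

The dwell timer is a design choice to stop a passing glance from firing a sample. A piece that wants more skittish, glance-driven remixing could drop it.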

Playing with the distinction between appearance and reality, Foo’s sonic landscape fuses sight and sound. Craning one’s neck is a gesture we employ to get a better look at something; Foo uses V.R. to ask, “What if where we look affects what we hear? What if how we look mutates melody?” These intriguing questions are not new to Foo, who is a member of the pop-electronica trio Giana Factory, and who maintains a parallel career as a visual artist.

Fresh from two weeks of recording in a seaside wooden cabin near the Danish town of Hundested, Foo tells me about the curiosity driving her art practice. “It’s to learn about sound. I don’t have classical training, I don’t know how to read or write sheet music, so I’m trying to understand sound from a different angle.” A graduate of NYU’s Interactive Telecommunications Program, Foo has rendered common audio effects such as reverb and delay as blotchy screenprints and built a tangible, colourful tonewheel interface for creating musical loops. Spurred by her excitement about the lack of material constraints in V.R., Foo worked with programmer Kenny Lozowski to create a prototype of Lake Louise in March 2016 at the Banff Centre for Arts and Creativity’s inaugural Convergence Residency (initiated by Banff and MUTEK); the piece is now in ongoing development in collaboration with NYC-based media artist Sarah Rothberg. Foo tells me the project is an arena where “any sound could be any object, or any visual aspect.” This untethered freedom is a far cry from the location and budgetary constraints of music video production. Given Foo’s experience directing videos for Giana Factory, I ask her whether she thinks Lake Louise and recent V.R. projects by recording artists Björk, Ash Koosha, and Run the Jewels may represent the future of music videos. “I think it could definitely be an extension of a music video as we know it,” she says cautiously. “But I don’t know—at this point Lake Louise is really just an experiment; it’s a mix between a song and a music video that becomes a new kind of composition.” While Foo is searching for new ways to represent, experience, and interact with songs, most V.R. projects are not so explicitly about listening; sometimes the role that sound plays is more insidious.

“IT’S ABOUT MORE THAN VERISIMILITUDE: it’s about finding ways to create coherences between the way an environment is represented, the way it feels, and the atmosphere its soundscape creates,” says Alexander Porter, director of photography for Brooklyn-based immersive-media studio Scatter, in a Skype conversation. Craft trumps realism every time, he continues: “If those aspects are all operating in tandem, you have this incredible latitude to create genuinely new kinds of worlds that have their own logic.”

Porter [LEFT, AT COMPUTER] and James George [LEFT, CENTRE], Scatter’s technical director, work in a field known as computational photography, which uses lidar-like motion capture. Scatter’s DepthKit is a hardware and software toolkit that allows users to attach a Microsoft Kinect (the motion-sensing input device developed for the Xbox 360 and Xbox One) to a digital SLR camera in order to shoot volumetric video. It was used to capture interviews and performances for the third instalment of Toronto filmmaker Katerina Cizek’s interactive narrative documentary Highrise (National Film Board of Canada) and for the New York Times’ multimedia feature about the Kronos Quartet, Inside The Quartet. With DepthKit, what you film is fluid: the resulting 3-D footage can be manipulated in VFX software or a videogame engine, and the camera angle of a shot can even be changed in post-production. George explored this plasticity at length and to great effect in CLOUDS, a V.R. film he produced in collaboration with Jonathan Minard. The 2014 documentary features interviews with a who’s who of leading design luminaries and digital artists, including Bruce Sterling, Paola Antonelli, Karsten Schmidt, and Chris Sugrue, transforming their likenesses into shimmering point clouds and rendering them in a TRON-like code world.

Scatter’s film Blackout, currently in development, moves beyond point clouds into light, shadow, and space. It has a simple premise: a power outage interrupts a New York City subway commute, and the viewer (as rider) scans the thoughts and memories of fellow passengers. To facilitate the ESP voyeurism, Scatter enlisted an eclectic cast of New Yorkers (a musician from New Zealand, a yoga instructor who trains Rikers Island inmates, a break-dance troupe famous for their rush-hour dance routines), filmed them, and imported the 3-D characters into a meticulously reconstructed model of an MTA R160 train and the Morgan Avenue station on the L line, which connects Manhattan and Brooklyn.

Scatter enlisted Brooklyn creative-audio studio Antfood to collect an extensive archive of field recordings of train and environmental noises: the train in motion and at rest, its ventilation, the PA system, etc. “There is a pure channel of reality there that allows us to experiment with the artifice of the visual world and not lose our viewers,” George says. “Sound becomes the anchor to the real.” To build that archive, Antfood used a Neumann head and 3Dio ears to collect binaural recordings—“the poor man’s ambisonic,” Porter quips [SEE ABOVE]. A pristine reproduction of the subway’s signature two-tone door chime, captured via shotgun mike, thus works as a bait-and-switch, lending authenticity while coaxing the viewer to suspend their disbelief in the meshy, polygonal geometries of the film’s characters. “Also, from an interaction design perspective, people don’t yet know how to act in virtual reality,” George adds. “What is amazing about the physiology of spatial sound is that you can emit a sound in space based on the knowledge of where someone’s head is and its orientation and they’ll look in the direction of the trigger totally subconsciously.”

Format, narrative intent, and responses to existing media play a large part in determining how sound is mobilized in virtual reality. Equally important is how V.R. is experienced. Lately, it’s become quite social. Many established film festivals are now embracing the 360-degree experience. POP, a series of three weekend-long events showcasing various facets of the emerging medium, is produced by the Toronto International Film Festival’s (TIFF’s) Digital Studio. “We see it as a way to join the conversation and look at something that is transforming how we see the world through the moving image,” says TIFF Digital Studio director Jody Sugrue.

When I visited POP 01 in late June, several Samsung Gear viewing stations, each a cluster of chic pod chairs gathered around didactic wall texts, formed a media lounge near the entrance. Most of the works, however, enjoyed spacious, quasi-installation setups that were meant to be experienced communally, often bolstered by projections and graphics. “We were very deliberate in leveraging the 3,400-square-foot HSBC gallery to build out an artistically rendered environment that felt as visually interesting as the stories we were showcasing,” Sugrue explained via email. Indeed, the noisy and crowded space—filled with groups watching their friends try V.R. on for size—evoked the stroboscopic arcades of yesteryear.

“People are targeting film festivals as a place to premiere work, which I think is smart from an industry or audience-development perspective,” says Scatter’s James George. But his company’s take on buzz-building at festivals is conflicted: a “technopolitical turf war” is brewing between mainstream cultural infrastructure and the disruptive streaming services that George calls “the would-be Netflixes or YouTubes of V.R.” His colleague Porter, however, is keeping an eye on the platforms doing game-engine-style distribution for mobile phones: “While there are very few highly engaged people watching this stuff on the Rift or the Vive [V.R. headsets], way more people are encountering V.R. on mobile phones, and that is deeply exciting to me.”

Whether in a packed festival hall or in the privacy of one’s living room, donning a V.R. headset seems destined to become part of our media vernacular. Porter’s comments about the phone as a potential endgame of V.R. are worth underscoring. We’re already snapping our phones into Samsung Gear V.R. headsets to serve as their displays; the contemporary iteration of the phone, a technology forged to transmit voice, has become, as well, a vehicle for how we see. Last fall at the Vanity Fair New Establishment summit in San Francisco, Facebook CEO Mark Zuckerberg called mobile the “first widely beloved computing platform,” lauded the “current golden era of video on the Web,” and cited V.R.’s ascendance as a natural progression of those developments. While his corporatized vision for the medium may be rooted in the distribution of banal V.R. snapshots documenting tropical vacations or children’s first steps, the sound technologies that reinforce that immersion will need to be extremely sophisticated.

Scatter technical director James George spent much of the early 2000s surrounded by composers and sound artists at the University of Washington, and he draws parallels between the ambitions that were prevalent within that community (many of whose members used the programming language SuperCollider) and the promise of V.R. “People were making large-scale sound installations that were hard to describe and even harder to experience,” he recalls. “I see V.R. as a way for that kind of intimate, powerful, spatial experience to be distributed around the world.” Seen in this optimistic light, V.R. could be a Trojan horse for spatial audio and immersive sound. The technology is undoubtedly here, and the conventions and standards are solidifying; it presents a platform for transporting us to an encounter or moment in time, for blowing up and reassembling how we think about and experience music, and even a means for abstracting and defamiliarizing our experience of the everyday. How we choose to engage those opportunities, and what they will sound like, remains to be seen.